Introduction

For many companies, email has become a more important communication tool than the telephone. Internal employee communication, vendor and partner communication, email integration with business applications, collaboration using shared documents and schedules, and the ability to capture and archive key business interactions all contribute to the increasing reliance on email.

Businesses of all sizes, from multinational enterprises to small and midsize businesses, use the messaging and collaboration features of Microsoft Exchange to run business functions that, if lost for even a short time, can cause severe business disruption. No wonder Exchange has become a critical application for so many businesses. When these businesses look at high availability solutions to protect key business applications, Exchange is often the first application targeted for protection.

Improving the availability of Exchange involves reducing or eliminating the many potential causes of downtime. Planned downtime is less disruptive since it can be scheduled for nights or weekends, when user activity is much lower. Unplanned downtime, on the other hand, tends to occur at the worst possible times and can impact the business severely. Unplanned downtime can have many causes, including hardware failures, software failures, operator errors, data loss or corruption, and site outages. To successfully protect Exchange, you need to ensure that no single point of failure can render Exchange servers, storage or network unavailable. This article explains how to identify your failure risk points and highlights industry best practices to reduce or eliminate them, depending on your organization’s Exchange availability needs, resources and budget.

Exchange Availability Options

Most availability products for Exchange fall into one of three categories: traditional failover clusters, virtualization clusters and data replication. Some solutions combine elements of both clustering and data replication; however, no single solution can address all possible causes of downtime. Traditional and virtualization clusters both rely on shared storage and the ability to run applications on an alternate server if the primary server fails or requires maintenance. Data replication software maintains a second copy of the application data, at either a local or remote site, and supports either manual or automated failover to handle planned maintenance or unplanned server failures.

All of these products rely on redundant servers to provide availability. Applications can be moved to an alternate server if a primary server fails or requires maintenance. It is also possible to add redundant components within a server to reduce the chances of server failure.

Get Rid Of Failover – Get Rid Of Downtime

Most availability products rely on a recovery process called “failover” that begins after a failure occurs. A failover moves application processing to an alternate host, either after an unplanned failure or by operator command to accommodate planned maintenance. Failovers are effective in bringing applications back online reasonably quickly, but they do result in application downtime and the loss of in-process transactions and in-memory application data, and they introduce the possibility of data corruption. Even a routine failover will result in minutes or tens of minutes of downtime, including the time required for application restart and, after an unplanned failure, data recovery. In the worst case, software bugs or errors in scripts or operational procedures can cause failovers that do not work properly, extending downtime to hours or even days. Reducing the number of failovers, shortening the duration of failovers, and ensuring that the failover process is completely reliable all contribute to reducing Exchange downtime.
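The relationship between failover count, failover duration and total downtime can be sketched with simple arithmetic. The following is a minimal illustration only; the function names and the sample figures (six failovers of 15 minutes each) are assumptions chosen for the example, not measurements from any Exchange environment.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def annual_downtime_minutes(failovers_per_year: int, minutes_per_failover: float) -> float:
    """Total downtime per year if every failover takes the same amount of time."""
    return failovers_per_year * minutes_per_failover


def availability_percent(downtime_minutes: float) -> float:
    """Percentage of the year during which the service was available."""
    return 100.0 * (1.0 - downtime_minutes / MINUTES_PER_YEAR)


if __name__ == "__main__":
    # Hypothetical figures: six failovers a year, 15 minutes each.
    downtime = annual_downtime_minutes(6, 15)
    print(f"Downtime: {downtime} minutes per year")
    print(f"Availability: {availability_percent(downtime):.3f}%")
```

Halving either the number of failovers or their duration halves the annual downtime in this model, which is why both are worth attacking.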

Local server redundancy and basic failover address the most common failures that cause unplanned Exchange downtime. However, data loss or corruption, and site disruptions, although less common, can cause much longer outages and require additional solution elements to properly address.

Evaluate Unplanned Downtime Causes

Unplanned downtime can be caused by a number of different events:

-Catastrophic server failures caused by memory, processor or motherboard failures

-Server component failures including power supplies, fans, internal disks, disk controllers, host bus adapters and network adapters

-Software failures of the operating system, middleware or application

-Site problems such as power failures, network disruptions, fire, flooding or natural disasters

Each category of unplanned downtime is addressed in more detail below.

How to Avoid Server Hardware Failures

Server core components include power supplies, fans, memory, CPUs and main logic boards. Purchasing robust, name-brand servers, performing recommended preventative maintenance, and monitoring server errors for signs of future problems can all help reduce the chances of failover due to catastrophic server failure.

Failovers caused by server component failures can be significantly reduced by adding redundancy at the component level. Robust servers are available with redundant power and cooling. ECC memory, with the ability to correct single-bit memory errors, has been a standard feature of most servers for several years. Newer memory technologies including advanced ECC, online spare memory, and mirrored memory provide additional protection but are only available on higher-cost servers. Online spare and mirrored memory can increase memory costs significantly and may not be cost effective for many Exchange environments.

Internal disks, disk controllers, host bus adapters and network adapters can all be duplicated. However, adding component redundancy to every server can be both expensive and complex.

Reduce Storage Hardware Failures

Storage protection relies on device redundancy combined with RAID storage to protect data access and data integrity from hardware failures. There are distinct issues for both local disk storage and for shared network storage.

Critical Moves To Protect Your Local Storage

Local storage is only used for static and temporary system data in a clustering solution. Data replication solutions maintain a copy of all local data on a second server. However, failure of unprotected local storage will result in an unplanned server failure, introducing the downtime and risks involved in a failover to an alternate server. For local storage, it is quite easy to add extra disks configured with RAID 1 protection. It is critical that a second disk controller is also used and that disks within each RAID 1 set are connected to separate controllers. Using other RAID levels, such as RAID 5, is not recommended for local disk storage because data can be corrupted if the write cache is lost.
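The controller rule above can be checked mechanically against an inventory of local disks. The sketch below assumes a hypothetical inventory format (a dictionary mapping each RAID 1 set name to its disks and the controller each disk is attached to); it does not query real hardware.

```python
from typing import Dict, List, Tuple

# Hypothetical inventory format: set name -> list of (disk, controller) pairs.
MirrorSets = Dict[str, List[Tuple[str, str]]]


def mirrors_sharing_a_controller(mirror_sets: MirrorSets) -> List[str]:
    """Return the names of RAID 1 sets in which two or more disks share a controller."""
    flagged = []
    for name, disks in mirror_sets.items():
        controllers = {controller for _disk, controller in disks}
        # Fewer distinct controllers than disks means at least two disks share one.
        if len(controllers) < len(disks):
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    inventory = {
        "system": [("disk0", "controllerA"), ("disk1", "controllerB")],
        "temp": [("disk2", "controllerA"), ("disk3", "controllerA")],
    }
    print(mirrors_sharing_a_controller(inventory))
```

In the sample inventory only the "temp" set is flagged, because both of its disks hang off the same controller.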

Secure Your Shared Storage

Shared storage depends on redundancy within the storage array itself. Fortunately, storage arrays from many storage vendors are available with full redundancy that includes disks, storage controllers, caches, network controllers, power and cooling. Redundant, synchronized write caches available in many storage arrays allow the use of performance-boosting write caching without the data corruption risks associated with single write caches. It is critical, however, that only fully-redundant storage arrays are used; lower-cost, non-redundant storage array options should be avoided.

Access to shared storage relies on either a Fibre Channel or Ethernet storage network. To assure uninterrupted access to shared storage, these networks must be designed to eliminate all single points of failure. This requires redundancy of network paths, network switches and network connections to each storage array. Multiple host bus adapters (HBAs) within each server can protect servers from HBA or path failures. Multipath I/O software, required for supporting redundant HBAs, is available in many standard operating systems (including MPIO for Windows) and is also provided by many storage vendors; examples include EMC PowerPath, HP Secure Path and Hitachi Dynamic Link Manager. However, these competing solutions are not supported by all storage network and storage array vendors, and they can be difficult to configure, which often makes it hard to choose the correct multipath software for a particular environment. The problem becomes worse if the storage environment includes network elements and storage arrays from more than one vendor.
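One way to reason about single points of failure in a storage network is to list every path from a server to the array and look for components that appear in all of them. The sketch below is an illustration under that assumption; the path representation and the component names (hba0, switchA and so on) are hypothetical, and it does not interact with any multipath software.

```python
from typing import List, Set, Tuple

# Hypothetical model: each path is the tuple of components it passes through.
Path = Tuple[str, ...]


def single_points_of_failure(paths: List[Path]) -> Set[str]:
    """Return the components shared by every path; losing any one cuts all access."""
    if not paths:
        raise ValueError("at least one path to storage is required")
    common = set(paths[0])
    for path in paths[1:]:
        common &= set(path)
    return common


if __name__ == "__main__":
    # Two HBAs and two array controllers, but both paths use the same switch.
    shared_switch = [
        ("hba0", "switchA", "array_ctrl0"),
        ("hba1", "switchA", "array_ctrl1"),
    ]
    print(single_points_of_failure(shared_switch))

    # Fully separate paths leave no common component.
    redundant = [
        ("hba0", "switchA", "array_ctrl0"),
        ("hba1", "switchB", "array_ctrl1"),
    ]
    print(single_points_of_failure(redundant))
```

An empty result means no single component failure removes every path, which is the design goal described above.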

Say Goodbye to Networking Failures

The network infrastructure itself must be fault-tolerant, consisting of redundant network paths, switches, routers and other network elements. Server connections can also be duplicated to eliminate failovers caused by the failure of a single server component. Take care to ensure that the physical network hardware does not share common components. For example, dual-ported network cards share common hardware logic and a single card failure can disable both ports. Full redundancy requires either two separate adapters or the combination of a built-in network port along with a separate network adapter.

Software to control failover and load sharing across multiple adapters falls into the category of NIC teaming and includes many different options. Options include fault tolerance (active/passive operation with failover), load balancing (multiple transmit with single receive) and link aggregation (simultaneous transmit and receive across multiple adapters). Load balancing and link aggregation also include failover.

Choosing among these configuration options can be difficult and must be considered along with the overall network capabilities and design goals. For example, link aggregation requires support in the network switches and includes several different protocol options including Gigabit EtherChannel and IEEE 802.3ad. Link aggregation also requires that all connections be made to the same switch, opening a vulnerability to a switch failure.
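The trade-off between teaming modes and switch redundancy can be captured in a small validation routine. This is a sketch of the rules as stated in this article only; the `TeamingMode` enum, the `validate_team` function and the switch names are hypothetical and do not correspond to any vendor's teaming software.

```python
from enum import Enum
from typing import List


class TeamingMode(Enum):
    FAULT_TOLERANCE = "active/passive operation with failover"
    LOAD_BALANCING = "multiple transmit with single receive"
    LINK_AGGREGATION = "simultaneous transmit and receive"


def validate_team(mode: TeamingMode, adapter_switches: List[str]) -> List[str]:
    """Return a list of problems for a team, given the switch each adapter connects to."""
    problems = []
    if len(adapter_switches) < 2:
        problems.append("a team needs at least two adapters")
    distinct_switches = set(adapter_switches)
    if mode is TeamingMode.LINK_AGGREGATION and len(distinct_switches) > 1:
        # Per the article, aggregated links must all terminate on the same switch.
        problems.append("link aggregation requires all adapters on the same switch")
    if len(distinct_switches) == 1:
        problems.append("all adapters connect to one switch, a single point of failure")
    return problems


if __name__ == "__main__":
    print(validate_team(TeamingMode.FAULT_TOLERANCE, ["switch1", "switch2"]))
    print(validate_team(TeamingMode.LINK_AGGREGATION, ["switch1", "switch1"]))
```

Under these rules a link aggregation team always reports a problem: either it spans switches, which the mode does not allow, or it depends on a single switch.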

Minimize Software Failures

Software failures can occur at the operating system level or at the Exchange application level. In virtualization environments, the hypervisor itself or virtual machines can fail. In addition to hard failures, performance problems or functional problems can seriously impact Exchange users, even while all of the software components continue to operate. Beyond proper software installation and configuration along with the timely installation of hot fixes, the best way to improve software reliability is the use of effective monitoring tools. Fortunately, there is a wide choice of monitoring and management tools for Exchange available from Microsoft as well as from third parties.

Reduce Operator Errors

Operator errors are a major cause of downtime. Proven, well-documented procedures and properly skilled and trained IT staff will greatly reduce the chance for operator errors. But some availability solutions can actually increase the chance of operator errors by requiring specialized staff skills and training, by introducing the need for complex failover script development and maintenance, or by requiring the precise coordination of configuration changes across multiple servers.

Secure Yourself from Site-Wide Outages

Site failures can range from an air conditioning failure or leaking roof that affects a single building, to a power failure that affects a limited local area, to a major hurricane that affects a large geographic area. Site disruptions can last anywhere from a few hours to days or even weeks. While site failures are less common than hardware or software failures, they can be far more disruptive.

A disaster recovery solution based on data replication is a common way to protect Exchange from a site failure while minimizing the downtime associated with recovery. A data replication solution that moves data changes in real time and optimizes wide area network bandwidth will result in a low risk of data loss in the event of a site failure. Solutions based on virtualization can reduce hardware requirements at the backup site and simplify ongoing configuration management and testing.

For sites located close enough to each other to support a high-speed, low-latency network connection, solutions offering better availability with no data loss are another option.

Failover Reliability

Investments in redundant hardware and availability software are wasted if the failover process is unreliable. It is obviously important to select a robust availability solution that handles failovers reliably and to ensure that your IT staff is properly skilled and trained. Solutions need to be properly installed, configured, maintained and tested.

Some solution features that contribute to failover reliability include the following:

-Simple to install, configure and maintain, placing a smaller burden on IT staff time and specialized knowledge while reducing the chance of errors

-Avoidance of scripting or failover policy choices that can introduce failover errors

-Detection of actual hardware and software errors rather than timeout-based error detection

-Guaranteed resource reservation versus best-effort algorithms that risk resource overcommitment

Protect Against Data Loss and Corruption

There are problems of data loss and corruption that require solutions beyond hardware redundancy and failover. Errors in application logic or mistakes by users or IT staff can result in accidentally deleted files or records, incorrect data changes and other data loss or integrity problems. Certain types of hardware or software failures can lead to data corruption. Site problems or natural disasters can result in loss of access to data or the complete loss of data. Beyond the need to protect current data, both business and regulatory requirements add the need to archive and retrieve historical data, often spanning several years and multiple types of data. Full protection against data loss and corruption requires a comprehensive backup and recovery strategy along with a disaster recovery plan.

In the past, backup and recovery strategies have been based on writing data to tape media that can be stored off-site. However, this approach has several drawbacks:

-Backup operations require storage and processing resources that can interfere with production operation and may require some applications to be stopped during the backup window

-Backup intervals typically range from a few hours to a full day, with the risk of losing several hours of data updates that occur between backups

-Using tape backup for disaster recovery results in recovery times measured in days, an unacceptable level of downtime for many organizations

Data replication is a better solution for both data protection and disaster recovery. Data replication solutions capture data changes from the primary production system and send them, in real time, to a backup system at a remote disaster site, at the local site, or both. There is still the chance that a system failure can occur before data changes have been replicated, but the exposure is in seconds or minutes rather than hours or days. Data replication can be combined with error detection and failover tools to help get a disaster recovery site up and running in minutes or hours, rather than days. Local data copies can be used to reduce tape backup requirements and to separate archival tape backup from production system operation to eliminate resource contention and remove backup window restrictions.
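The difference in exposure between periodic backup and real-time replication can be illustrated with a rough calculation. The sketch below assumes updates arrive at a steady rate, which real mail traffic does not; the function name and all figures (2,000 updates per hour, a 24-hour backup interval, a 36-second replication lag) are hypothetical.

```python
from datetime import timedelta


def updates_at_risk(protection_interval: timedelta, updates_per_hour: float) -> float:
    """Worst-case number of updates lost if a failure hits just before the next copy."""
    hours = protection_interval.total_seconds() / 3600
    return hours * updates_per_hour


if __name__ == "__main__":
    rate = 2000  # hypothetical steady update rate per hour
    nightly_backup = updates_at_risk(timedelta(hours=24), rate)
    replication = updates_at_risk(timedelta(seconds=36), rate)
    print(f"Nightly backup: up to {nightly_backup:.0f} updates at risk")
    print(f"Replication with 36 s lag: up to {replication:.0f} updates at risk")
```

The absolute numbers matter less than the ratio: shrinking the interval from hours to seconds shrinks the worst-case loss by the same factor.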

Consider Issues That Cause Planned Downtime

Hardware and software reconfiguration, hardware upgrades, software hot fixes and service packs, and new software releases can all require planned downtime. Planned downtime can be scheduled for nights and weekends, when system activity is lower, but there are still issues to consider. IT staff morale can suffer if off-hour activity is too frequent. Companies may need to pay overtime costs for this work. And application downtime, even on nights and weekends, can still be a problem for many companies that use their systems on a 24/7 basis.

Using redundant servers in an availability solution can allow reconfiguration and upgrades to be applied to one server while Exchange continues to run on a different server. After the reconfiguration or upgrade is completed, Exchange can be moved to the upgraded server with minimal downtime. Most of the work can be done during normal hours. Solutions based on virtualization, which can move applications from one server to another with no downtime, can reduce planned downtime even further. Be aware that changes to application data structures and formats can preclude this type of upgrade.

Added Benefits of Virtualization

The latest server virtualization technologies, while not required for protecting Exchange, do offer some unique benefits that can make Exchange protection both easier and more effective.

Virtualization makes it very easy to set up evaluation, test and development environments without the need for additional, dedicated hardware. Many companies cannot afford the additional hardware required for testing Exchange in a traditional, physical environment but effective testing is one of the keys to avoiding problems when making configuration changes, installing hot fixes, or moving to a new update release.

Virtualization allows resources to be adjusted dynamically to accommodate growth or peak loads. The alternative is to buy enough extra capacity upfront to handle expected growth, but this can result in expensive excess capacity. On the other hand, if the configuration was sized only for the short-term load requirements, growth can lead to poor performance and ultimately to the disruption associated with upgrading or replacing production hardware.

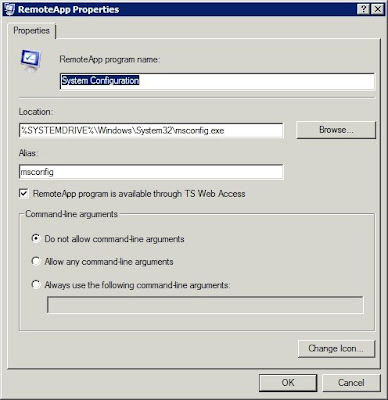

Figure B: The application’s properties sheet provides you with detailed information about the application that you have selected

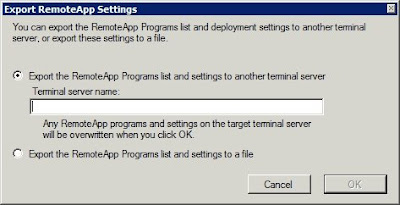

Figure B: The application’s properties sheet provides you with detailed information about the application that you have selected Figure C: The TS RemoteApp Manager allows you to export remote application settings to other servers

Figure C: The TS RemoteApp Manager allows you to export remote application settings to other servers